MariaDB Master/Master GTID based Replication with keepalived VIP

Important: FromDual does NOT recommend using an automated VIP failover technology as described below for a MariaDB/MySQL Master/Master Replication. In such a topology we recommend a manual VIP failover with prepared scripts!

Some of our customers still want to have old-style MariaDB Master/Master Replication Clusters. Time goes by, new technologies appear but some old stuff still remains.

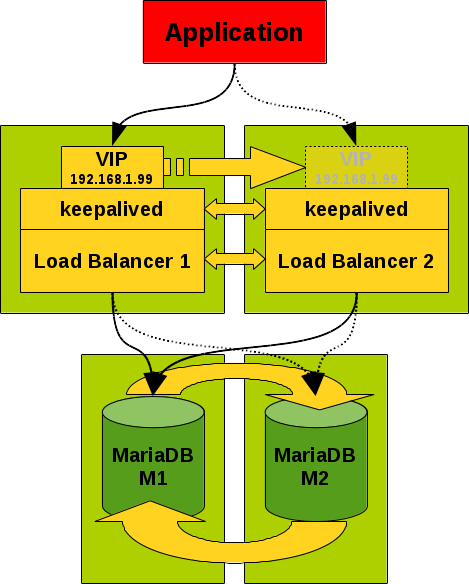

The main problem in a Master/Master Replication set-up is to make the service highly available for the application (applications typically cannot deal with more than one point of contact). This can be achieved with a load balancer (HAProxy, Galera Load Balancer (GLB), ProxySQL or MaxScale) in front of the MariaDB master/master replication cluster. But the load balancer itself should also be highly available. This is typically achieved with a virtual IP (VIP) in front of the load balancers. To make operating the VIP easier, the VIP is controlled by a service like keepalived or corosync.

Figure 1: MariaDB Master/Master replication set-up with load balancer and keepalived

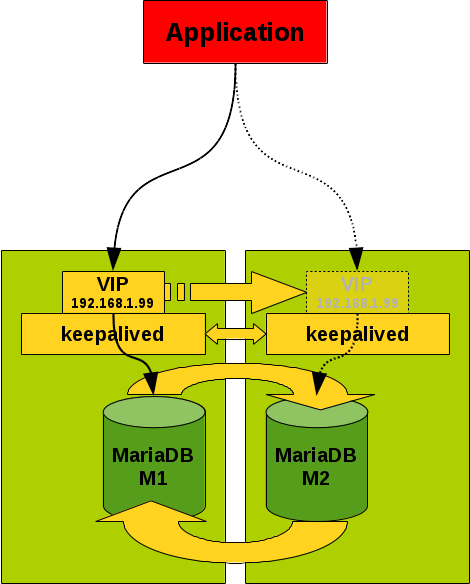

Because I like simple solutions (I am a strong believer in the KISS principle), I thought about avoiding the load balancer in the middle, attaching the VIP directly to the master/master replication servers and letting keepalived control it there as well.

Figure 2: MariaDB Master/Master replication set-up with keepalived

Important: A master/master replication set-up is vulnerable to split-brain situations. Neither keepalived nor the master/master replication helps you to avoid conflicts or prevents this situation in any way. If you are sensitive to split-brain situations you should look at Galera Cluster. Keepalived is made for stateless services like load balancers, not for databases.

Set-up a MariaDB master/master replication cluster

Because most Linux distributions ship somewhat old software versions, we use the MariaDB 10.2 repository from the MariaDB website:

#

# /etc/yum.repos.d/MariaDB-10.2.repo

#

# MariaDB 10.2 CentOS repository list - created 2017-11-08 20:32 UTC

# http://downloads.mariadb.org/mariadb/repositories/

#

[mariadb]

name = MariaDB

baseurl = http://yum.mariadb.org/10.2/centos7-amd64

gpgkey=https://yum.mariadb.org/RPM-GPG-KEY-MariaDB

gpgcheck=1

Then we install the MariaDB server and start it:

shell> yum makecache

shell> yum install MariaDB-server MariaDB-client

shell> systemctl start mariadb

shell> systemctl enable mariadb

For the MariaDB master/master replication set-up configuration we use the following parameters:

#

# /etc/my.cnf

#

[mysqld]

server_id = 1 # 2 on the other node

log_bin = binlog-m1 # binlog-m2 on the other node

log_slave_updates = 1

gtid_domain_id = 1 # 2 on the other node

gtid_strict_mode = On

auto_increment_increment = 2

auto_increment_offset = 1 # 2 on the other node

read_only = On # super_read_only for MySQL 5.7 and newer
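The effect of the two auto_increment settings can be illustrated with a small sketch. The function name auto_inc_values is made up for this example and it only mimics the arithmetic (offset plus a multiple of the increment); it does not talk to the database.

```shell
#!/bin/bash
# Illustration only: print the first COUNT auto-increment values a node
# would hand out for a given auto_increment_offset / auto_increment_increment.
# auto_inc_values is a hypothetical helper, not part of MariaDB.
auto_inc_values() {
  local offset=$1 increment=$2 count=$3
  local i=0 out=""
  while [ "$i" -lt "$count" ]; do
    # value = offset + i * increment; separate values with one space
    out="${out}${out:+ }$((offset + i * increment))"
    i=$((i + 1))
  done
  echo "$out"
}

# Node 1 (offset 1): auto_inc_values 1 2 3
# Node 2 (offset 2): auto_inc_values 2 2 3
```

With an increment of 2, node 1 only generates odd values and node 2 only even values, so both masters can insert into the same table without colliding on the auto-increment key.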

Then we close the master/master replication ring, following the procedure for starting with an empty server:

mariadb> SET GLOBAL gtid_slave_pos = "";

mariadb> CHANGE MASTER TO master_host="192.168.56.101", master_user="replication", master_use_gtid=current_pos;

mariadb> START SLAVE;
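After closing the ring it is worth checking on both nodes that the replication threads are really running. A minimal sketch, assuming the output of SHOW SLAVE STATUS\G is piped into a helper function (replication_ok is a name invented here, not an existing tool):

```shell
#!/bin/bash
# Hypothetical helper: reads 'SHOW SLAVE STATUS\G' output on stdin and
# succeeds only if both the I/O thread and the SQL thread report "Yes".
replication_ok() {
  local status
  status=$(cat)
  echo "$status" | grep -q 'Slave_IO_Running: Yes' &&
    echo "$status" | grep -q 'Slave_SQL_Running: Yes'
}

# Possible usage (assumes the mysql client can log in without prompting):
#   mysql -e 'SHOW SLAVE STATUS\G' | replication_ok && echo "replication running"
```

The check has to pass on both masters, because each node is a slave of the other one.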

Installing keepalived

The next step is to install and configure keepalived. This can be done as follows:

shell> yum install keepalived

shell> systemctl enable keepalived

Important: In my tests I got crashes and core dumps with keepalived which disappeared after a full upgrade of CentOS 7.

Configuring keepalived

The most important part is the keepalived configuration file:

#

# /etc/keepalived/keepalived.conf

#

global_defs {

notification_email {

root@localhost

dba@example.com

}

notification_email_from root@master1 # master2 on the other node

smtp_server localhost 25

router_id MARIADB_MM

enable_script_security

}

# Health checks

vrrp_script chk_mysql {

script "/usr/sbin/pidof mysqld"

weight 2 # Is relevant for the diff in priority

interval 1 # every ... seconds

timeout 3 # script considered failed after ... seconds

fall 3 # number of failures for K.O.

rise 1 # number of success for OK

}

vrrp_script chk_failover {

script "/etc/keepalived/chk_failover.sh"

weight -4 # Is relevant for the diff in priority

interval 1 # every ... seconds

timeout 1 # script considered failed after ... seconds

fall 1 # number of failures for K.O.

rise 1 # number of success for OK

}

# Main configuration

vrrp_instance VI_MM_VIP {

state MASTER # BACKUP on the other side

interface enp0s9 # private heartbeat interface

priority 100 # Higher means: elected first (BACKUP: 99)

virtual_router_id 42 # ID for all nodes of Cluster group

debug 0 # 0 .. 4, seems not to work?

unicast_src_ip 192.168.56.101 # Our private IP address

unicast_peer {

192.168.56.102 # Peers private IP address

}

# For keepalived communication

authentication {

auth_type PASS

auth_pass Secr3t!

}

# VIP to move around

virtual_ipaddress {

192.168.1.99/24 dev enp0s8 # public interface for VIP

}

# Check health of local system. See vrrp_script above.

track_script {

chk_mysql

# If File /etc/keepalived/failover is touched failover is triggered

# Similar can be reached when priority is lowered followed by a reload

chk_failover

}

# When node becomes MASTER this script is triggered

notify_master "/etc/keepalived/keepalived_master.sh --user=root --password= --wait=yes --variable=read_only"

# When node becomes BACKUP this script is triggered

notify_backup "/etc/keepalived/keepalived_backup.sh --user=root --password= --kill=yes --variable=read_only"

# Possibly fault and stop should also call keepalived_backup.sh to be on the safe side...

notify_fault "/etc/keepalived/keepalived_fault.sh arg1 arg2"

notify_stop "/etc/keepalived/keepalived_stop.sh arg1 arg2"

# Triggered on ANY state transition

notify /etc/keepalived/keepalived_notify.sh

smtp_alert # send notification during state transit

}

Listing 6: keepalived configuration for Master/Master VIP failover
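The priority arithmetic behind the two health checks can be sketched as follows. As far as I understand the keepalived weight handling, a positive weight is added to the priority while the script succeeds, and a negative weight is subtracted while the script fails. The function effective_priority and its arguments are invented for this illustration; the weights +2 and -4 are the ones from the configuration above.

```shell
#!/bin/bash
# Illustration only: compute the effective VRRP priority of a node.
#   $1 = configured priority (100 on MASTER, 99 on BACKUP)
#   $2 = 1 if chk_mysql succeeds (mysqld is running), else 0
#   $3 = 1 if /etc/keepalived/failover exists (chk_failover fails), else 0
# effective_priority is a hypothetical helper, not a keepalived command.
effective_priority() {
  local base=$1 mysql_ok=$2 failover_flag=$3
  local prio=$base
  # chk_mysql: weight 2, added while the check is OK
  if [ "$mysql_ok" -eq 1 ]; then prio=$((prio + 2)); fi
  # chk_failover: weight -4, subtracted while the check is K.O.
  if [ "$failover_flag" -eq 1 ]; then prio=$((prio - 4)); fi
  echo "$prio"
}

# effective_priority 100 1 0   # healthy MASTER
# effective_priority 99 1 0    # healthy BACKUP
# effective_priority 100 1 1   # MASTER with failover file touched
# effective_priority 100 0 0   # MASTER with mysqld down
```

A healthy MASTER (102) beats a healthy BACKUP (101). Touching the failover file drops the MASTER to 98, and a dead mysqld drops it to 100, so in both cases the BACKUP with 101 takes over the VIP.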

With the command:

shell> systemctl restart keepalived

the service is started and/or the configuration is reloaded.

The scripts we used in the configuration file are the following:

chk_failover.sh, keepalived_backup.sh, keepalived_fault.sh, keepalived_master.sh, keepalived_notify.sh, keepalived_stop.sh

#!/bin/bash

#

# /etc/keepalived/keepalived_notify.sh

#

TYPE=${1}

NAME=${2}

STATE=${3}

PRIORITY=${4}

TS=$(date '+%Y-%m-%d_%H:%M:%S')

LOG=/etc/keepalived/keepalived_notify.log

echo "${TS} ${0} ${*}" >>"${LOG}"

#!/bin/bash

#

# /etc/keepalived/chk_failover.sh

#

/usr/bin/stat /etc/keepalived/failover 2>/dev/null 1>&2

if [ ${?} -eq 0 ] ; then

exit 1

else

exit 0

fi

To make MariaDB master/master replication more robust against replication problems we took the following (configurable) actions on the database side:

Getting the MASTER role:

- Waiting for catch-up replication

- Make the MariaDB instance read/write

Getting the BACKUP role:

- Make the MariaDB instance read-only

- Kill all open connections
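The actions listed above could be implemented roughly like this. This is a hypothetical sketch and NOT the original keepalived_master.sh or keepalived_backup.sh: the function names are invented, and using Seconds_Behind_Master as the catch-up criterion is an assumption (it is only an approximation, and it is NULL when replication is stopped).

```shell
#!/bin/bash
# Hypothetical sketch; not the scripts used in the set-up above.

# Print the statement that switches the instance for a given keepalived role.
role_sql() {
  case "$1" in
    master) echo "SET GLOBAL read_only = OFF;" ;;
    backup) echo "SET GLOBAL read_only = ON;" ;;
    *)      echo "unknown role: $1" >&2; return 1 ;;
  esac
}

# Read 'SHOW SLAVE STATUS\G' output on stdin and print Seconds_Behind_Master.
seconds_behind() {
  awk -F': ' '/Seconds_Behind_Master:/ {print $2}'
}

# Turn connection ids (one per line on stdin) into KILL statements.
kill_sql() {
  while read -r id; do
    echo "KILL ${id};"
  done
}

# Possible wiring when getting the MASTER role (assumes a working mysql login):
#   until [ "$(mysql -e 'SHOW SLAVE STATUS\G' | seconds_behind)" = "0" ]; do sleep 1; done
#   role_sql master | mysql
# Possible wiring when getting the BACKUP role (ids of the connections to
# kill would have to be selected first, for example from the process list):
#   role_sql backup | mysql
```

The functions only produce or parse text, so they can be tried out without a database before being wired to the mysql client.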

Testing scenarios

The following scenarios were tested under load (insert_test.sh):

- Intentional failover for maintenance:

shell> touch /etc/keepalived/failover

shell> rm -f /etc/keepalived/failover

- Stopping keepalived:

shell> systemctl stop keepalived

shell> systemctl start keepalived

- Stopping MariaDB node:

shell> systemctl stop mariadb

shell> systemctl start mariadb

- Reboot server:

shell> reboot

- Simulation of split-brain:

shell> ip link set enp0s9 down

shell> ip link set enp0s9 up
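During these tests it helps to see which node currently holds the VIP, especially in the split-brain scenario where both nodes may claim it. A minimal sketch, assuming the one-line output format of ip -o -4 addr show; has_vip is a helper name made up for this example:

```shell
#!/bin/bash
# Hypothetical helper: reads 'ip -o -4 addr show' output on stdin and
# succeeds if the given address is configured on the interface.
has_vip() {
  local vip=$1
  # -F: match the address literally, so dots are not treated as wildcards
  grep -qF " inet ${vip}/"
}

# Possible usage on each node (public interface and VIP as configured above):
#   ip -o -4 addr show dev enp0s8 | has_vip 192.168.1.99 && echo "VIP is here"
```

If the check succeeds on both nodes at the same time, the cluster is in a split-brain state.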

Problems

Problems we faced during set-up and testing were:

- SELinux/AppArmor

- Firewall

Keepalived controlling 2 virtual IPs

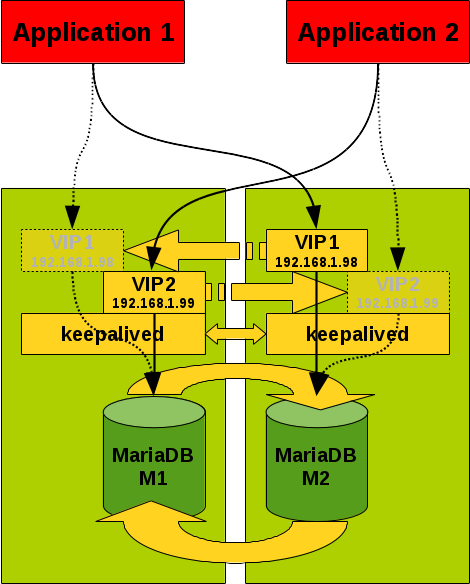

A second scenario we wanted to build is a MariaDB master/master GTID based replication cluster with 2 VIP addresses. This allows either a read-only VIP plus a read/write VIP, or splitting the load so that each master serves half of it:

Figure 3: MariaDB Master/Master replication set-up with keepalived and 2 VIPs

For this scenario we used the same scripts but a slightly different keepalived configuration:

#

# /etc/keepalived/keepalived.conf

#

global_defs {

notification_email {

root@localhost

dba@example.com

}

notification_email_from root@master1 # master2 on the other node

smtp_server localhost 25

router_id MARIADB_MM

enable_script_security

}

# Health checks

vrrp_script chk_mysql {

script "/usr/sbin/pidof mysqld"

weight 2 # Is relevant for the diff in priority

interval 1 # every ... seconds

timeout 3 # script considered failed after ... seconds

fall 3 # number of failures for K.O.

rise 1 # number of success for OK

}

vrrp_script chk_failover {

script "/etc/keepalived/chk_failover.sh"

weight -4 # Is relevant for the diff in priority

interval 1 # every ... seconds

timeout 1 # script considered failed after ... seconds

fall 1 # number of failures for K.O.

rise 1 # number of success for OK

}

# Main configuration

vrrp_instance VI_MM_VIP1 {

state MASTER # BACKUP on the other side

interface enp0s9 # private heartbeat interface

priority 100 # Higher means: elected first (BACKUP: 99)

virtual_router_id 42 # ID for all nodes of Cluster group

unicast_src_ip 192.168.56.101 # Our private IP address

unicast_peer {

192.168.56.102 # Peers private IP address

}

# For keepalived communication

authentication {

auth_type PASS

auth_pass Secr3t!

}

# VIP to move around

virtual_ipaddress {

192.168.1.99/24 dev enp0s8 # public interface for VIP

}

# Check health of local system. See vrrp_script above.

track_script {

chk_mysql

chk_failover

}

# Triggered on ANY state transition

notify /etc/keepalived/keepalived_notify.sh

smtp_alert # send notification during state transit

}

vrrp_instance VI_MM_VIP2 {

state BACKUP # MASTER on the other side

interface enp0s9 # private heartbeat interface

priority 99 # Higher means: elected first (MASTER: 100)

virtual_router_id 43 # ID for all nodes of Cluster group

unicast_src_ip 192.168.56.101 # Our private IP address

unicast_peer {

192.168.56.102 # Peers private IP address

}

# For keepalived communication

authentication {

auth_type PASS

auth_pass Secr3t!

}

# VIP to move around

virtual_ipaddress {

192.168.1.98/24 dev enp0s8 # public interface for VIP

}

# Check health of local system. See vrrp_script above.

track_script {

chk_mysql

chk_failover

}

# Triggered on ANY state transition

notify /etc/keepalived/keepalived_notify.sh

smtp_alert # send notification during state transit

}

Listing 16: keepalived configuration for Master/Master VIP failover

- Shinguz's blog
